Our latest AI Pulse survey, taken by 64 listeners of The Artificial Intelligence Show, reveals a complex split in how professionals are processing the commercialization of AI.

While a slight majority are resigned to the arrival of ads in ChatGPT, a significant portion of the audience signals that monetization could cost OpenAI their trust and potentially their business.

However, the skepticism towards ads pales in comparison to the resistance facing autonomous AI agents. The data indicates that while professionals may tolerate ads on their screens, they are overwhelmingly unwilling to give AI agents free rein over their local files.

The Ad Tolerance Threshold

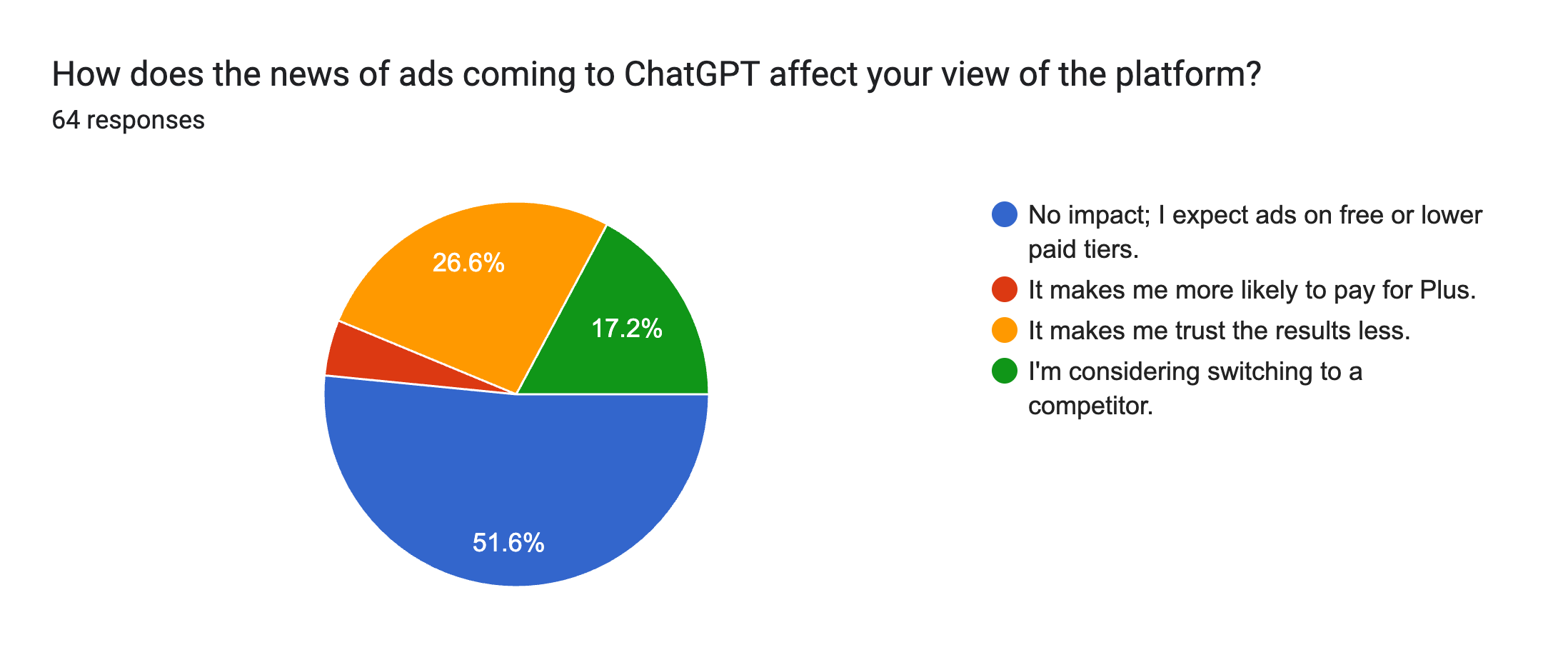

When asked how the news of ads coming to ChatGPT free and Go tier accounts affects their view of the platform, the response was mixed, highlighting a fragility in user loyalty.

A narrow majority—51.6%—said the news had "No impact; I expect ads on free or lower paid tiers." This suggests that for half the audience, the trade-off between free utility and advertising is accepted as standard internet economics.

But the remaining respondents said the news changed their view, most of them for the worse.

- 26.6% said, "It makes me trust the results less."

- 17.2% said, "I'm considering switching to a competitor."

- 4.7% said, "It makes me more likely to pay for Plus."

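Combined, the first two responses account for 43.8% of respondents, a clear negative shift in sentiment. Adding the 4.7% who said ads make them more likely to pay for Plus, 48.4% of respondents reported that the news changed their view in some way. For more than four in ten respondents, the introduction of ads is a credibility issue, not just a nuisance. If the perceived neutrality of the tool degrades, these users appear ready to explore alternatives.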
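As a cross-check on the combined figures, the reported percentages can be converted back into respondent counts. The sketch below assumes each percentage is a rounded share of the 64 respondents. The counts and the names `SHARES`, `implied_counts`, and `pct` are inferred for illustration and are not reported survey data.

```python
# Reported shares for the ads question, in percent (taken from the results above).
SHARES = {
    "no_impact": 51.6,
    "trust_less": 26.6,
    "considering_switch": 17.2,
    "more_likely_plus": 4.7,
}
N = 64  # number of survey respondents


def implied_counts(shares, n):
    """Convert rounded percentages into whole respondent counts (an inference)."""
    return {key: round(value * n / 100) for key, value in shares.items()}


def pct(count, n=N):
    """Express a respondent count as a percentage rounded to one decimal place."""
    return round(100 * count / n, 1)


counts = implied_counts(SHARES, N)

# Sanity check: the inferred counts should add up to the full sample.
assert sum(counts.values()) == N

# Respondents who trust the results less or are considering a competitor.
negative = counts["trust_less"] + counts["considering_switch"]

# Everyone who did not answer "No impact".
changed = N - counts["no_impact"]

print(counts)
print(pct(negative))  # 43.8
print(pct(changed))   # 48.4
```

Summing the rounded percentages directly gives 48.5%, while summing the implied counts gives 31 of 64 respondents, or 48.4%. The small gap is a rounding effect, not a discrepancy in the data.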

The "Sandbox" Requirement for Agents

If ads are a friction point, file access is a blockade. We asked respondents if they were comfortable allowing an AI agent (like Claude Cowork) to read and edit files directly on their computer. The results show that unrestricted access is currently a non-starter for most professionals.

Only 10.9% of respondents said, "Yes, the productivity boost is worth it."

The vast majority either demand strict guardrails or are not ready to grant access at all:

- 50% said, "Only if limited to a specific folder."

- 28.1% said, "No, I'm not ready for that level of access."

- 10.9% said, "I need to see more safety reviews first."

This data suggests that the agentic future, where AI autonomously manages workflows across a desktop, faces a massive trust barrier. Just under 90% of respondents are currently unwilling to grant unrestricted access to their files. The most popular option, chosen by half of respondents, is a "sandbox" approach, where the AI is contained to a single, safe environment rather than being given the keys to the entire machine.
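To make the "specific folder" idea concrete, here is a minimal Python sketch of the kind of check a sandboxed agent could run before touching a file. It is an illustration only, not how Claude Cowork or any other product enforces access. The function name `is_inside_sandbox` and the folder `/tmp/agent_workspace` are hypothetical.

```python
from pathlib import Path


def is_inside_sandbox(requested: str, sandbox_root: str) -> bool:
    """Return True if `requested` resolves to a location inside `sandbox_root`.

    Hypothetical helper: resolving both paths first means that `..` segments
    and symlinks cannot be used to step outside the allowed folder.
    """
    root = Path(sandbox_root).resolve()
    # Relative paths are interpreted against the sandbox root; an absolute
    # path replaces the root entirely and is then rejected by the check below.
    target = (root / requested).resolve()
    return target == root or root in target.parents


# Assumed sandbox folder for the example; it does not need to exist.
SANDBOX = "/tmp/agent_workspace"

print(is_inside_sandbox("notes/todo.txt", SANDBOX))   # True
print(is_inside_sandbox("../secrets.txt", SANDBOX))   # False
print(is_inside_sandbox("/etc/passwd", SANDBOX))      # False
```

A path check like this is only one layer. A real deployment would likely also rely on operating system permissions or containerization, which this sketch does not cover.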

Methodology and Audience Description

In our ongoing AI Pulse surveys, we gather insights from listeners of our podcast to get a sense of how our audience feels about various topics in artificial intelligence. Each survey is conducted over a one-week period, coinciding with the first seven days after an episode is released. During that time, our episodes typically receive around 11,000 downloads.

Our survey results reflect a self-selected sample of listeners who choose to participate, and typically we receive a few hundred responses. While this is not a formal or randomized survey, it offers a meaningful snapshot of how our engaged audience perceives AI-related issues.

In summary, when you see percentages in our headlines, they represent the views of those listeners who chose to share their opinions with us. This approach helps us understand the pulse of our community, even if it doesn’t represent a statistically randomized sample of the broader population.

Mike Kaput

Mike Kaput is the Chief Content Officer at SmarterX and a leading voice on the application of AI in business. He is the co-author of Marketing Artificial Intelligence and co-host of The Artificial Intelligence Show podcast.